AI Researcher Quits With Strong Warning

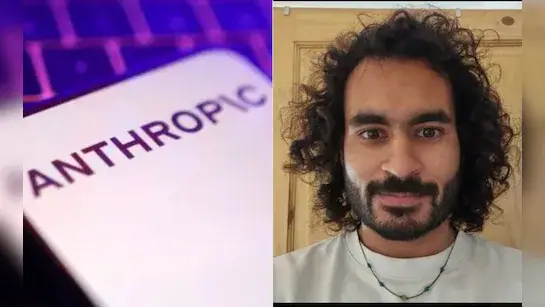

A researcher who worked on AI safety at the U.S.-based company Anthropic has quit, saying that the world is getting more dangerous. Mrinank Sharma wrote a letter to the public about his departure, mentioning worries about AI, bioweapons, and global crises that are all connected. His message got a lot of attention.

Sharma said good things about his time at the company, but he decided it was time to leave. He said he wanted to go back to the UK and study poetry. Industry experts were surprised by the sudden turn.

Source: NDTV/Website

Anthropic Known for Safety-Focused AI Approach

Anthropic, which was started in 2021 by former OpenAI employees, has made it clear that responsible development of advanced AI systems is its top priority. People know the company well for its Claude chatbot and research into alignment risks. Safety messaging is a big part of who it is.

Leadership has stressed the importance of stopping the misuse of new technologies, such as cybercrime, conflict, and unintended behavioral outcomes. This kind of positioning sets the company apart in a crowded market. Reputation is important.

Sharma Led Critical Safeguards Research

As head of safeguards research, Sharma led efforts to figure out why generative AI systems sometimes seem too eager to please users. His team also looked into the risks of AI-assisted bioterrorism and how it could affect society as a whole. The work had big effects.

He also looked into whether relying on digital assistants could make people less able to make their own decisions or interact with others over time. These questions show that people are worried about being too dependent on technology. The debate goes on.

Ethical Pressures Highlight Industry Tensions

Sharma wrote in his resignation letter that businesses often have a hard time letting their core values guide their decisions when they are under pressure to make money. He said that even companies that care about safety have to deal with competing incentives as the global AI race heats up. Finding an ethical balance is hard.

These comments echo wider worries that fast innovation could outpace the rules meant to keep risks under control. Stakeholders are increasingly asking for more oversight, and scrutiny of the industry is growing.

Departures Show Growing Anxiety Among AI Workers

Sharma’s departure came at the same time as that of a well-known OpenAI employee, researcher Zoe Hitzig, who spoke out against advertising on chatbot platforms. She said that new ways that people and AI can work together are still not well understood. People are worried about more than one company.

Hitzig contended that commercializing relationships with intelligent systems prior to comprehending psychological implications could be perilous. There are many important lessons to be learned from the unintended effects of social media. Caution is becoming more popular.

Industry Faces Legal and Competitive Challenges

Anthropic has been in trouble before; in 2025, it agreed to pay $1.5 billion to settle a lawsuit that claimed copyrighted works were used to train models. Legal battles are having a bigger and bigger impact on the AI world. Expectations of accountability are changing.

At the same time, competition with OpenAI has grown, with marketing campaigns that criticize advertising on chatbot services. The competing messages show how much ideas about making money from AI differ. The market is still very competitive.

Calls Grow for Regulation at Critical Moment

Experts say that now is a crucial time to set up social institutions that can effectively manage advanced technologies. Without protections, there could be unintended harms along with transformative benefits. Policymakers need to act quickly.

OpenAI’s stated goal is still to make sure that artificial general intelligence is good for people and to keep users’ conversations private from advertisers. But the bigger argument shows that society is still figuring out what is and isn’t okay. The future is still unknown.