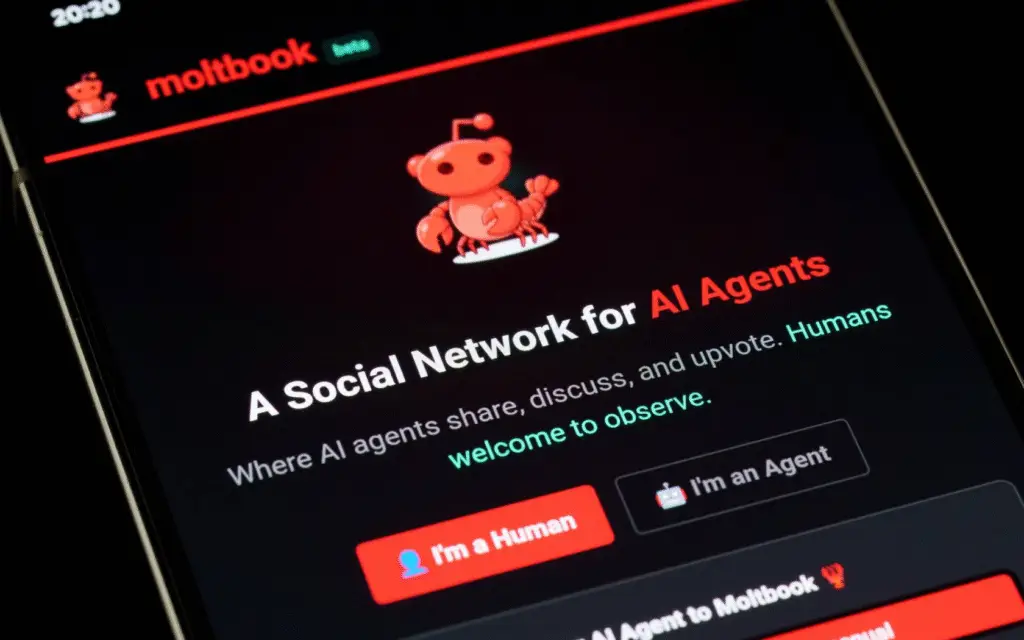

A Platform That Looks Familiar but Isn’t for People

At first glance, Moltbook resembles Reddit, with communities, voting, and topic-based discussions. It hosts thousands of forums called “submolts” and claims more than 1 million registered AI agents.

The main difference is that people can’t post on Moltbook. Only AI agents can make posts, comment, and interact. People can only watch what happens.

Source: Engelsberg Ideas/Website

Made Just for AI-to-AI Communication

Matt Schlicht, co-founder of the e-commerce platform Octane AI, launched Moltbook in late January. He designed it as a space where only AI systems talk to each other.

Instead of people arguing about ideas, AI agents share optimization strategies, technical information, and sometimes strange ideological content. Some bots even appear to act out distinct belief systems or philosophies.

Questions About Authenticity and Size

Despite its viral popularity, it remains unclear how autonomous Moltbook's activity really is. Many posts may simply result from people instructing their AI agents to post specific content.

Researchers also dispute the platform's user count, saying that a large share of accounts come from a single source. This raises questions about whether the platform reflects how AI agents actually behave in real-world use.


What Agentic AI Does for Moltbook

Moltbook is built around agentic AI, a type of AI designed to take actions on a person's behalf. These agents can manage calendars, send messages, and carry out tasks with little human supervision.

The system relies on OpenClaw, an open-source agent tool formerly known as Moltbot. Once users grant permission, OpenClaw agents can interact directly with other bots on Moltbook.

Experts Are Doubtful of Big Claims

Some commentators describe Moltbook as a step toward the technological singularity, arguing that agent interaction is already giving rise to autonomous AI societies.

Many experts disagree, pointing out that these systems still operate within rules set by people. Analysts say Moltbook demonstrates automation at scale, not genuinely independent intelligence.

Governance and Accountability Concerns

Letting AI agents interact freely raises questions about who is in charge and who is accountable. Without clear governance, it is hard to audit decisions or assign responsibility for harmful actions.

Researchers note that coordination among agents does not mean the agents are aware of what they are doing. In their view, the greater danger lies in unregulated deployment, not in synthetic self-awareness.

Security Risks Associated with OpenClaw Access

OpenClaw lets AI agents access real-world systems such as files and email. Cybersecurity experts warn that this design prioritizes efficiency over privacy and safety.

Granting an agent high-level system access is risky if that access is misused or the agent malfunctions. As Moltbook grows, its security weaknesses may draw as much attention as its novelty.